Computing

Thinking in Silicon

Microchips modeled on the brain may excel at tasks that baffle today’s computers.

Picture a person reading these words on a laptop in a coffee shop. The machine, made of metal, plastic, and silicon, consumes about 50 watts of power as it translates bits of information (a long string of 1s and 0s) into a pattern of dots on a screen. Meanwhile, inside that person’s skull, a gooey clump of proteins, salt, and water uses a fraction of that power not only to recognize those patterns as letters, words, and sentences but also to recognize the song playing on the radio.

Computers are incredibly inefficient at lots of tasks that are easy for even the simplest brains, such as recognizing images and navigating in unfamiliar spaces. Machines found in research labs or vast data centers can perform such tasks, but they are huge and energy-hungry, and they need specialized programming. Google recently made headlines with software that can reliably recognize cats and human faces in video clips, but this achievement required no fewer than 16,000 powerful processors.

A new breed of computer chips that operate more like the brain may be about to narrow the gulf between artificial and natural computation—between circuits that crunch through logical operations at blistering speed and a mechanism honed by evolution to process and act on sensory input from the real world. Advances in neuroscience and chip technology have made it practical to build devices that, on a small scale at least, process data the way a mammalian brain does. These “neuromorphic” chips may be the missing piece of many promising but unfinished projects in artificial intelligence, such as cars that drive themselves reliably in all conditions, and smartphones that act as competent conversational assistants.

“Modern computers are inherited from calculators, good for crunching numbers,” says Dharmendra Modha, a senior researcher at IBM Research in Almaden, California. “Brains evolved in the real world.” Under a $100 million project called Synapse, funded by the Pentagon’s Defense Advanced Research Projects Agency (DARPA), Modha leads one of two groups that have built computer chips with a basic architecture copied from the mammalian brain.

The prototypes have already shown early sparks of intelligence, processing images very efficiently and gaining new skills in a way that resembles biological learning. IBM has created tools to let software engineers program these brain-inspired chips; the other prototype, at HRL Laboratories in Malibu, California, will soon be installed inside a tiny robotic aircraft, from which it will learn to recognize its surroundings.

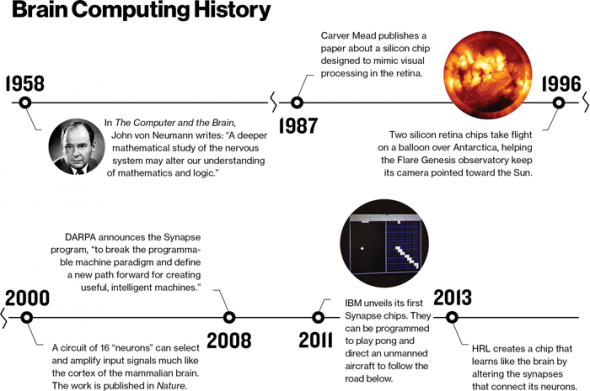

The evolution of brain-inspired chips began in the early 1980s with Carver Mead, a professor at the California Institute of Technology and one of the fathers of modern computing. Mead had made his name by helping to develop a way of designing computer chips called very large scale integration, or VLSI, which enabled manufacturers to create much more complex microprocessors. This triggered explosive growth in computation power: computers looked set to become mainstream, even ubiquitous. But the industry seemed happy to build them around one blueprint, dating from 1945. The von Neumann architecture, named after the Hungarian-born mathematician John von Neumann, is designed to execute linear sequences of instructions. All today’s computers, from smartphones to supercomputers, have just two main components: a central processing unit, or CPU, to manipulate data, and a block of random access memory, or RAM, to store the data and the instructions on how to manipulate it. The CPU begins by fetching its first instruction from memory, followed by the data needed to execute it; after the instruction is performed, the result is sent back to memory and the cycle repeats. Even multicore chips that handle data in parallel are limited to just a few simultaneous linear processes.
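The fetch-and-execute cycle described above can be sketched as a toy stored-program machine in Python, in which instructions and data sit side by side in one memory list. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the `run` function are hypothetical, invented only to show the one-instruction-at-a-time loop; real processors add pipelines, caches, and far larger instruction sets.

```python
# Toy stored-program machine: instructions and data share one memory list.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical.

def run(memory):
    """Execute instructions from `memory`, one at a time, until HALT."""
    acc = 0    # single accumulator register inside the "CPU"
    pc = 0     # program counter: address of the next instruction
    steps = 0
    while True:
        op, addr = memory[pc]       # 1. fetch the next instruction from memory
        pc += 1
        steps += 1
        if op == "LOAD":            # 2. fetch the data the instruction needs
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":         # 3. send the result back to memory
            memory[addr] = acc
        elif op == "HALT":
            return memory, steps
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Program that computes memory[6] = memory[4] + memory[5].
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2,  # address 4: data
    3,  # address 5: data
    0,  # address 6: result
]
final_memory, steps = run(program)
print(final_memory[6], steps)  # 5 4
```

Even this three-line program takes four trips around the loop, each one starting with a fetch from memory.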

That approach developed naturally from theoretical math and logic, where problems are solved with linear chains of reasoning. Yet it was unsuitable for processing and learning from large amounts of data, especially sensory input such as images or sound. It also came with built-in limitations: to make computers more powerful, the industry had tasked itself with building increasingly complex chips capable of carrying out sequential operations faster and faster, but this put engineers on course for major efficiency and cooling problems, because speedier chips produce more waste heat. Mead, now 79 and a professor emeritus, sensed even then that there could be a better way. “The more I thought about it, the more it felt awkward,” he says, sitting in the office he retains at Caltech. He began dreaming of chips that processed many instructions—perhaps millions—in parallel. Such a chip could accomplish new tasks, efficiently handling large quantities of unstructured information such as video or sound. It could be more compact and use power more efficiently, even if it were more specialized for particular kinds of tasks. Evidence that this was possible could be found flying, scampering, and walking all around. “The only examples we had of a massively parallel thing were in the brains of animals,” says Mead.
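The trade-off between speed and heat follows from the standard first-order estimate of a CMOS chip's dynamic, or switching, power. In the textbook formula below (general engineering background, not a figure from the article), α is the fraction of the chip's capacitance that switches each clock cycle, C is that capacitance, V is the supply voltage, and f is the clock frequency:

$$P_{\text{dyn}} \approx \alpha\, C\, V^{2} f$$

At a fixed voltage, doubling the clock rate doubles the power, and pushing the clock higher has generally also required raising V, so power, and with it waste heat, tends to grow faster than speed.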

Brains compute in parallel as the electrically active cells inside them, called neurons, operate simultaneously and unceasingly. Bound into intricate networks by threadlike appendages, neurons influence one another’s electrical pulses via connections called synapses. When information flows through a brain, it processes data as a fusillade of spikes that spread through its neurons and synapses. You recognize the words in this paragraph, for example, thanks to a particular pattern of electrical activity in your brain triggered by input from your eyes. Crucially, neural hardware is also flexible: new input can cause synapses to adjust so as to give some neurons more or less influence over others, a process that underpins learning. In computing terms, it’s a massively parallel system that can reprogram itself.
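A common way to model the behavior this paragraph describes is the leaky integrate-and-fire neuron: each neuron accumulates input, slowly leaks charge, and emits a spike when it crosses a threshold, while synaptic weights set how hard one neuron's spike pushes another. The Python sketch below is a generic textbook model, not the circuit used by IBM or HRL; the function name `simulate` and the `threshold` and `leak` values are assumptions for illustration.

```python
# Minimal leaky integrate-and-fire (LIF) network in discrete time.
# Parameter values are illustrative assumptions, not taken from any chip.

def simulate(weights, external, steps, threshold=1.0, leak=0.9):
    """Simulate len(weights) LIF neurons.

    weights[i][j]  : synaptic strength from neuron i to neuron j
    external[t][j] : outside input (e.g. from a sensor) to neuron j at step t
    Returns a list where entry t holds the indices of neurons that fired at t.
    """
    n = len(weights)
    potential = [0.0] * n
    fired = [False] * n
    history = []
    for t in range(steps):
        # Every neuron updates from the *previous* step's spikes, so the
        # update is logically parallel even though Python loops serially.
        new_potential = []
        for j in range(n):
            synaptic = sum(weights[i][j] for i in range(n) if fired[i])
            new_potential.append(leak * potential[j] + synaptic + external[t][j])
        fired = [v >= threshold for v in new_potential]
        # Neurons that spike reset to zero; the others keep their charge.
        potential = [0.0 if f else v for v, f in zip(new_potential, fired)]
        history.append([j for j in range(n) if fired[j]])
    return history


# Three neurons in a chain, 0 -> 1 -> 2, each synapse strong enough to fire the next.
weights = [
    [0.0, 1.2, 0.0],
    [0.0, 0.0, 1.2],
    [0.0, 0.0, 0.0],
]
# One outside kick to neuron 0 at step 0, then silence.
external = [[1.5, 0.0, 0.0]] + [[0.0, 0.0, 0.0]] * 3
history = simulate(weights, external, steps=4)
print(history)  # [[0], [1], [2], []]
```

The kick propagates down the chain one hop per step, a miniature version of a pattern of spikes spreading through a network.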

Ironically, though he inspired the conventional designs that endure today, von Neumann had also sensed the potential of brain-inspired computing. In the unfinished book The Computer and the Brain, published in 1958, a year after his death, he marveled at the size, efficiency, and power of brains compared with computers. “Deeper mathematical study of the nervous system … may alter the way we look on mathematics and logic,” he argued. When Mead came to the same realization more than two decades later, he found that no one had tried making a computer inspired by the brain. “Nobody at that time was thinking, ‘How do I build one?’” says Mead. “We had no clue how it worked.”

Mead finally built his first neuromorphic chips, as he christened his brain-inspired devices, in the mid-1980s, after collaborating with neuroscientists to study how neurons process data. By operating ordinary transistors at unusually low voltages, he could arrange them into feedback networks that looked very different from collections of neurons but functioned in a similar way. He used that trick to emulate the data-processing circuits in the retina and cochlea, building chips that could detect the edges of objects in an image and features in an audio signal. But the chips were difficult to work with, and the effort was limited by chip-making technology. With neuromorphic computing still just a curiosity, Mead moved on to other projects. “It was harder than I thought going in,” he reflects. “A fly’s brain doesn’t look that complicated, but it does stuff that we to this day can’t do. That’s telling you something.”

Neurons Inside

IBM’s Almaden lab, near San Jose, sits close to but apart from Silicon Valley—perhaps the ideal location from which to rethink the computing industry’s foundations. Getting there involves driving to a magnolia-lined street at the city’s edge and climbing up two miles of curves. The lab sits amid 2,317 protected acres of rolling hills. Inside, researchers pace long, wide, quiet corridors and mull over problems. Here, Modha leads the larger of the two teams DARPA recruited to break the computing industry’s von Neumann dependency. The basic approach is similar to Mead’s: build silicon chips with elements that operate like neurons. But he has the benefit of advances in neuroscience and chip making. “Timing is everything; it wasn’t quite right for Carver,” says Modha, who has a habit of closing his eyes to think, breathe, and reflect before speaking.

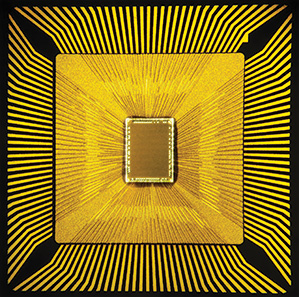

IBM makes neuromorphic chips by using collections of 6,000 transistors to emulate the electrical spiking behavior of a neuron and then wiring those silicon neurons together. Modha’s strategy for combining them to build a brainlike system is inspired by studies on the cortex of the brain, the wrinkly outer layer. Although different parts of the cortex have different functions, such as controlling language or movement, they are all made up of so-called microcolumns, repeating clumps of 100 to 250 neurons. Modha unveiled his version of a microcolumn in 2011. A speck of silicon little bigger than a pinhead, it contained 256 silicon neurons and a block of memory that defines the properties of up to 262,000 synaptic connections between them. Programming those synapses correctly can create a network that processes and reacts to information much as the neurons of a real brain do.
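One way to picture a block of memory that defines the synapses is a crossbar: a grid in which each row is an input line, each column is a neuron, and a stored bit says whether that input reaches that neuron. The Python sketch below assumes, hypothetically, 1,024 input lines feeding the 256 neurons, which gives 1,024 × 256 = 262,144 synapse sites, consistent with the article's figure of up to 262,000. The `Crossbar` class and its methods are illustrative only, not IBM's design or software, and the single shared `weight` is a simplification.

```python
class Crossbar:
    """Binary synapse grid: connected[a][n] is True if input line a reaches neuron n."""

    def __init__(self, n_inputs, n_neurons):
        self.n_inputs = n_inputs
        self.n_neurons = n_neurons
        self.connected = [[False] * n_neurons for _ in range(n_inputs)]

    def connect(self, a, n):
        """'Program' one synapse by setting its bit."""
        self.connected[a][n] = True

    def synapse_count(self):
        """Number of programmable synapse sites, whether set or not."""
        return self.n_inputs * self.n_neurons

    def drive(self, active_inputs, weight=1):
        """Input each neuron receives when the given input lines spike."""
        totals = [0] * self.n_neurons
        for a in active_inputs:
            row = self.connected[a]
            for n in range(self.n_neurons):
                if row[n]:
                    totals[n] += weight
        return totals


# Hypothetical size: 1,024 input lines x 256 neurons.
xbar = Crossbar(n_inputs=1024, n_neurons=256)
print(xbar.synapse_count())  # 262144

xbar.connect(0, 5)
xbar.connect(1, 5)
xbar.connect(1, 7)
drive = xbar.drive([0, 1])   # input lines 0 and 1 spike together
print(drive[5], drive[7])    # 2 1
```

In this picture, programming the synapses amounts to choosing which bits to set; a real chip would also need per-neuron settings such as thresholds, which the sketch leaves out.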

Setting that chip to work on a problem involves programming a simulation of the chip on a conventional computer and then transferring the configuration to the real chip. In one experiment, the chip could recognize handwritten digits from 0 to 9, even predicting which number someone was starting to trace with a digital stylus. In another, the chip’s network was programmed to play a version of the video game Pong. In a third, it directed a small unmanned aerial vehicle to follow the double yellow line on the road approaching IBM’s lab. None of these feats are beyond the reach of conventional software, but they were achieved using a fraction of the code, power, and hardware that would normally be required.

Modha is testing early versions of a more complex chip, made from a grid of neurosynaptic cores tiled into a kind of rudimentary cortex—over a million neurons altogether. Last summer, IBM also announced a neuromorphic programming architecture based on modular blocks of code called corelets. The intention is for programmers to combine and tweak corelets from a preëxisting menu, to save them from wrestling with silicon synapses and neurons. Over 150 corelets have already been designed, for tasks ranging from recognizing people in videos to distinguishing the music of Beethoven and Bach.

Learning Machines

On another California hillside 300 miles to the south, the other part of DARPA’s project aims to make chips that mimic brains even more closely. HRL, which looks out over Malibu from the foothills of the Santa Monica Mountains, was founded by Hughes Aircraft and now operates as a joint venture of General Motors and Boeing. With a koi pond, palm trees, and banana plants, the entrance resembles a hotel from Hollywood’s golden era. It also boasts a plaque commemorating the first working laser, built in 1960 at what was then called Hughes Research Labs.

On a bench in a windowless lab, Narayan Srinivasa’s chip sits at the center of a tangle of wires. The activity of its 576 artificial neurons appears on a computer screen as a parade of spikes, an EEG for a silicon brain. The HRL chip has neurons and synapses much like IBM’s. But like the neurons in your own brain, those on HRL’s chip adjust their synaptic connections when exposed to new data. In other words, the chip learns through experience.

The HRL chip mimics two learning phenomena in brains. One is that neurons become more or less sensitive to signals from another neuron depending on how frequently those signals arrive. The other is more complex: a process believed to support learning and memory, known as spike-timing-dependent plasticity. This causes a neuron to become more responsive to other neurons whose spikes have tended to arrive just before it fires, and less responsive to those whose spikes have tended to arrive just after. If groups of neurons are working together constructively, the connections between them strengthen, while less useful connections fall dormant.
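Both learning phenomena have standard textbook models, sketched below in Python. `stdp_delta` is the common pair-based form of spike-timing-dependent plasticity with exponential timing windows; `depressing_synapse` is a simple resource-depletion model of short-term depression, covering only the "less sensitive" half of the frequency effect (facilitation is left out). Neither is HRL's circuit: the function names and every parameter value (amplitudes, time constants in milliseconds, the fraction `u`) are illustrative assumptions.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Weight change for one presynaptic/postsynaptic spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Input arrived just before the neuron fired: strengthen the synapse,
        # more so the closer together the two spikes were.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Input arrived just after the neuron fired: weaken the synapse.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def depressing_synapse(spike_times, u=0.5, tau_rec=100.0):
    """Relative strength of each spike passed by a synapse with short-term depression.

    Each spike uses up a fraction `u` of the synapse's available resources,
    which then recover toward full strength with time constant `tau_rec` (ms),
    so closely spaced spikes find the synapse partly depleted.
    """
    resources = 1.0
    last_time = None
    strengths = []
    for t in spike_times:
        if last_time is not None:
            gap = t - last_time
            resources = 1.0 - (1.0 - resources) * math.exp(-gap / tau_rec)
        released = u * resources
        strengths.append(released)
        resources -= released
        last_time = t
    return strengths


# Spike pairs 5 ms apart change the weight more than pairs 40 ms apart.
print(stdp_delta(0.0, 5.0), stdp_delta(0.0, 40.0))
# A 100 Hz spike train weakens the synapse faster than a 2 Hz train.
print(depressing_synapse([0, 10, 20]), depressing_synapse([0, 500, 1000]))
```

Applied over many spike pairs, `stdp_delta` adds up to the behavior described above: inputs that reliably help a neuron fire grow stronger, and the rest fade.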

Results from experiments with simulated versions of the chip are impressive. The chip played a virtual game of Pong, just as IBM’s chip did. But unlike IBM’s chip, HRL’s wasn’t programmed to play the game—only to move its paddle, sense the ball, and receive feedback that either rewarded a successful shot or punished a miss. A system of 120 neurons started out flailing, but within about five rounds it had become a skilled player. “You don’t program it,” Srinivasa says. “You just say ‘Good job,’ ‘Bad job,’ and it figures out what it should be doing.” If extra balls, paddles, or opponents are added, the network quickly adapts to the changes.
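The "good job, bad job" training can be illustrated with reward-modulated Hebbian learning, in which a synapse changes only when its input and output neurons were both active, and a reward signal sets the sign of the change. The Python sketch below is a deliberately tiny stand-in for Pong, not HRL's network or learning rule: the ball is either above or below the paddle, two output neurons compete to move it up or down, and nothing tells the network which move is right except the reward. The names `train`, `ABOVE`, `BELOW`, `UP`, `DOWN` and all parameter values are hypothetical.

```python
import random

# Hypothetical labels for a two-input, two-output toy network.
ABOVE, BELOW = 0, 1  # input neurons: the ball is above or below the paddle
UP, DOWN = 0, 1      # output neurons: move the paddle up or down


def train(trials=200, lr=0.1, noise=0.3, seed=0):
    """Reward-modulated learning on a toy paddle task.

    Returns the learned weights and the fraction of correct moves
    in the last quarter of the trials.
    """
    rng = random.Random(seed)
    # weights[inp][out]: all equal at first, so early moves are coin flips.
    weights = [[0.5, 0.5], [0.5, 0.5]]
    outcomes = []
    for _ in range(trials):
        ball = rng.choice([ABOVE, BELOW])
        # Each output neuron is driven by its synapse from the active input,
        # plus noise; the more strongly driven neuron picks the move.
        drive = [weights[ball][out] + rng.uniform(0, noise) for out in (UP, DOWN)]
        action = UP if drive[UP] >= drive[DOWN] else DOWN
        # The only feedback is "good job" (+1) or "bad job" (-1).
        correct = (ball == ABOVE and action == UP) or (ball == BELOW and action == DOWN)
        reward = 1.0 if correct else -1.0
        # Only the synapse between the active input and the winning output
        # changes, by an amount signed by the reward; weights stay in [0, 1].
        w = weights[ball][action] + lr * reward
        weights[ball][action] = min(1.0, max(0.0, w))
        outcomes.append(correct)
    tail = outcomes[-max(1, trials // 4):]
    return weights, sum(tail) / len(tail)


weights, late_accuracy = train()
print(late_accuracy)
```

Each reward nudges only the synapse that produced the move, so after a few visits to each ball position the correct move outweighs the noise. The code never states the rule "move toward the ball"; it only hands out rewards, which is the point of Srinivasa's remark.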

This approach might eventually let engineers create a robot that stumbles through a kind of “childhood,” figuring out how to move around and navigate. “You can’t capture the richness of all the things that happen in the real-world environment, so you should make the system deal with it directly,” says Srinivasa. Identical machines could then incorporate whatever the original one has learned. But leaving robots some ability to learn after that point could also be useful. That way they could adapt if damaged, or adjust their gait to different kinds of terrain.

The first real test of this vision for neuromorphic computing will come next summer, when the HRL chip is scheduled to escape its lab bench and take flight in a palm-sized aircraft with flapping wings, called a Snipe. As a human remotely pilots the craft through a series of rooms, the chip will take in data from the craft’s camera and other sensors. At some point the chip will be given a signal that means “Pay attention here.” The next time the Snipe visits that room, the chip should turn on a light to signal that it remembers. Performing this kind of recognition would normally require too much electrical and computing power for such a small craft.

Alien Intelligence

Despite the Synapse chips’ modest but significant successes, it is still unclear whether scaling up these chips will produce machines with more sophisticated brainlike faculties. And some critics doubt it will ever be possible for engineers to copy biology closely enough to capture these abilities.

Neuroscientist Henry Markram, who discovered spike-timing-dependent plasticity, has attacked Modha’s work on networks of simulated neurons, saying their behavior is too simplistic. He believes that successfully emulating the brain’s faculties requires copying synapses down to the molecular scale; the behavior of neurons is influenced by the interactions of dozens of ion channels and thousands of proteins, he notes, and there are numerous types of synapses, all of which behave in complex, nonlinear ways. In Markram’s view, capturing the capabilities of a real brain would require scientists to incorporate all those features.

The DARPA teams counter that they don’t have to capture the full complexity of brains to get useful things done, and that successive generations of their chips can be expected to come closer to representing biology. HRL hopes to improve its chips by enabling the silicon neurons to regulate their own firing rate as those in brains do, and IBM is wiring the connections between cores on its latest neuromorphic chip in a new way, using insights from simulations of the connections between different regions of the cortex of a macaque.

Modha believes these connections could be important to higher-level brain functioning. Yet even after such improvements, these chips will still be far from the messy, complex reality of brains. It seems unlikely that microchips will ever match brains in fitting 10 billion synaptic connections into a single square centimeter, even though HRL is experimenting with a denser form of memory based on exotic devices known as memristors.
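A quick unit conversion shows what that density implies for each synapse, assuming the 10 billion connections are spread evenly over the square centimeter (1 cm = 10⁴ µm and 1 µm = 10³ nm):

$$\frac{1\ \text{cm}^2}{10^{10}} = \frac{10^{8}\ \mu\text{m}^2}{10^{10}} = 10^{-2}\ \mu\text{m}^2 = 10^{4}\ \text{nm}^2 = (100\ \text{nm})^2$$

In other words, each synapse, together with its share of the wiring, would have to fit in a patch about 100 nanometers on a side.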

At the same time, neuromorphic designs are still far removed from most computers we have today. Perhaps it is better to recognize these chips as something entirely apart—a new, alien form of intelligence.

They may be alien, but IBM’s head of research strategy, Zachary Lemnios, predicts that we’ll want to get familiar with them soon enough. Many large businesses already feel the need for a new kind of computational intelligence, he says: “The traditional approach is to add more computational capability and stronger algorithms, but that just doesn’t scale, and we’re seeing that.” As examples, he cites Apple’s Siri personal assistant and Google’s self-driving cars. These technologies are not very sophisticated in how they understand the world around them, Lemnios says; Google’s cars rely heavily on preloaded map data to navigate, while Siri taps into distant cloud servers for voice recognition and language processing, causing noticeable delays.

Today the cutting edge of artificial-intelligence software is a discipline known as “deep learning,” embraced by Google and Facebook, among others. It involves using software to simulate networks of very basic neurons on normal computer architecture (see “10 Breakthrough Technologies: Deep Learning,” May/June 2013). But that approach, which produced Google’s cat-spotting software, relies on vast clusters of computers to run the simulated neural networks and feed them data. Neuromorphic machines should allow such faculties to be packaged into compact, efficient devices for situations in which it’s impractical to connect to a distant data center. IBM is already talking with clients interested in using neuromorphic systems. Security video processing and financial fraud prediction are at the front of the line, as both require complex learning and real-time pattern recognition.

Whenever and however neuromorphic chips are finally used, it will most likely be in collaboration with von Neumann machines. Numbers will still need to be crunched, and even in systems faced with problems such as analyzing images, it will be easier and more efficient to have a conventional computer in command. Neuromorphic chips could then be used for particular tasks, just as a brain relies on different regions specialized to perform different jobs.

As has usually been the case throughout the history of computing, the first such systems will probably be deployed in the service of the U.S. military. “It’s not mystical or magical,” Gill Pratt, who manages the Synapse project at DARPA, says of neuromorphic computing. “It’s an architectural difference that leads to a different trade-off between energy and performance.” Pratt says that UAVs, in particular, could use the approach. Neuromorphic chips could recognize landmarks or targets without the bulky data transfers and powerful conventional computers now needed to process imagery. “Rather than sending video of a bunch of guys, it would say, ‘There’s a person in each of these positions—it looks like they’re running,’” he says.

This vision of a new kind of computer chip is one that both Mead and von Neumann would surely recognize.