Heat build-up in electronics comes down to simple energy loss. That seemingly innocuous warming, though, causes a twofold problem:

First, energy lost as heat is energy that does no computing: much of the power deliberately supplied to the machine dissipates instead of crunching numbers. Second, to add insult to injury, it costs money to remove all that waste heat, as data center managers know.

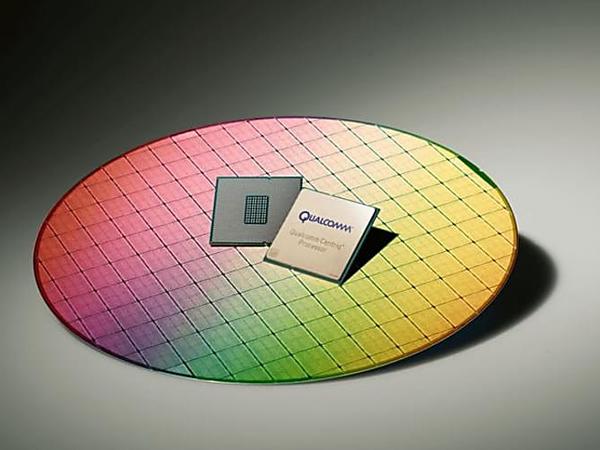

For both of those reasons, and others such as environmental impact and equipment longevity (hardware breaks down sooner when it runs hot), there’s an increasing effort underway to build computers in such a way that heat is eliminated completely. Transistors, superconductors, and chip design are three areas where major conceptual breakthroughs were announced in 2018. They’re significant developments, and consequently it might not be too long before we see the ultimate in efficiency: the cold-running computer.

Room-temperature switching

“Common inefficiencies in transistor materials cause energy loss,” says the U.S. Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab), in a news article on its website this month. That “results in heat buildup and shorter battery life.”

The lab is proposing, and says it has successfully demonstrated, a material called sodium bismuthide (Na3Bi) to be used for a new kind of transistor design, which it says can “carry a charge with nearly zero loss at room temperature.” No heat, in other words. Transistors perform switching and other tasks required in electronics.

The new “exotic, ultrathin material” is the basis of a topological transistor. That means the material has unique tunable properties, explains the group, which includes scientists from Monash University in Australia. It’s superconductor-like, they say, but unlike superconductors, it doesn’t need to be chilled. Superconductivity, found in some materials, is the state in which electrical resistance disappears, typically under extreme cooling.

“Packing more transistors into smaller devices is pushing toward the physical limits. Ultra-low energy topological electronics are a potential answer to the increasing challenge of energy wasted in modern computing,” the Berkeley Lab article says.

Electron spin with magnetic data

Another group of researchers from the University of Konstanz in Germany say supercomputers will be built without waste heat. That group is working on the transportation of electrons without heat production and is approaching it through a form of superconductivity.

“Magnetically encoded information can, in principle, be transported without heat production by using the magnetic properties of electrons, the electron spin,” they say in an article on the university’s website this month. “Spintronics,” as the field is called, exploits the electron’s inherent spin, which is related to magnetism. Roughly, the science uses an additional property of the electron, beyond its charge, thus creating efficiency gains.

The problem has been, though, that magnetism and a lossless flow of electrical current “are competing phenomena that cannot coexist,” the article says. That issue is related to how pairs of electrons become non-magnetic in the process and, therefore, crucially can’t carry magnetically encoded information.

However, the researchers say that they have now figured out how to do it. They bind pairs of electrons using “special magnetic materials” and superconductors. “Electrons with parallel spins can be bound to pairs carrying the supercurrent over longer distances through magnets,” the university’s article says.

In other words, they say, inherently non-heating superconducting spintronics might now be able to replace fundamentally hot semiconductor technology.

Cooling channels on the chip

The third breakthrough, one that I wrote about in November, optimizes chip design for cooling on the chips themselves.

“Spirals or mazes that coolant can travel through” should be embedded on the chip surface to cool it, instead of attaching heatsinks, say the inventors. Heatsinks are inefficient partly because of the thermal interface material they require.

Electronics could be kept cooler by 18 degrees F, and power use in data centers could be reduced by 5 percent, the spiral-cooling chip scientists at Binghamton University claim.
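To put those two figures in concrete terms, here is a minimal sketch in Python. The 18 degrees F and 5 percent values come from the claim above; the 1,000 kW facility size is purely hypothetical, chosen only to make the percentage tangible, and the function names are illustrative. Note that a temperature difference converts by the 5/9 scale factor alone, without the 32-degree offset used for absolute readings.

```python
def fahrenheit_delta_to_celsius(delta_f: float) -> float:
    """Convert a temperature *difference* from Fahrenheit to Celsius degrees.

    Differences scale by 5/9 only; the 32-degree offset applies to absolute
    readings, not to differences.
    """
    return delta_f * 5.0 / 9.0


def power_after_saving(baseline_kw: float, saving_fraction: float) -> float:
    """Return the power draw left after cutting a fraction of the baseline."""
    return baseline_kw * (1.0 - saving_fraction)


# Figures from the article: 18 degrees F cooler, 5 percent less power.
cooling_c = fahrenheit_delta_to_celsius(18.0)

# Hypothetical 1,000 kW facility (an assumption, not a figure from the article).
remaining_kw = power_after_saving(1000.0, 0.05)

print(f"{cooling_c:.1f} C cooler, {remaining_kw:.0f} kW remaining")
```

Under that assumed baseline, the claimed savings would amount to roughly 50 kW of continuous load, and the temperature drop works out to about 10 degrees on the Celsius scale.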
