Quantum volume, a metric for measuring the computational ability of quantum computers, is gaining acceptance from Gartner.

Measuring the computational ability of quantum computers is, as with anything involving quantum systems, a complex problem. Counting the number of qubits in a quantum computer is too simplistic to be a useful measure of computational power: differences in how individual qubits are connected, how the qubits themselves are designed, and environmental factors make this kind of head-to-head comparison misleading.

For example, D-Wave is planning to launch a 5,000-qubit system for cloud-based access in mid-2020. Google, by contrast, has a 72-qubit quantum computer called "Bristlecone," and IBM's Q System One is a 20-qubit design. Because these qubits are designed and connected differently, cross-vendor comparisons are unreliable: while D-Wave's upcoming 5,000-qubit system will undoubtedly be more capable than its current-generation 2,000-qubit system, it is not necessarily better than IBM's designs or Google's prototypes. Further, D-Wave's design is a quantum annealer, suited to a single type of calculation called quadratic unconstrained binary optimization (QUBO).
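To make "QUBO" concrete: a QUBO problem asks for the binary vector x that minimizes x^T Q x for a given matrix Q. The sketch below is a hypothetical classical brute-force solver, not D-Wave's software, and the 3-variable matrix is an arbitrary illustration. It enumerates all 2^n assignments, and that exponential growth is why specialized hardware such as annealers is pointed at larger instances.

```python
from itertools import product

# Hypothetical example matrix (upper-triangular form): diagonal entries are
# linear terms, off-diagonal entries are pairwise penalties between variables.
Q = [
    [-1, 2, 0],
    [0, -1, 2],
    [0, 0, -1],
]


def qubo_energy(x, Q):
    """Return x^T Q x for a binary vector x (the QUBO objective)."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force_qubo(Q):
    """Try all 2**n binary assignments and keep the lowest-energy one.

    Exhaustive search is only practical for very small n; this shows the
    problem an annealer targets, not how an annealer solves it.
    """
    n = len(Q)
    best_x, best_energy = None, float("inf")
    for x in product((0, 1), repeat=n):
        energy = qubo_energy(x, Q)
        if energy < best_energy:
            best_x, best_energy = x, energy
    return best_x, best_energy


print(brute_force_qubo(Q))  # ((1, 0, 1), -2)
```

Here the penalties on neighboring pairs push the optimum toward not setting adjacent variables together, so the best assignment turns on the first and third variables only.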


In contrast, the IBM and Google designs are general-purpose quantum computers that can be used for a wider variety of calculations, including integer factorization, the operation needed to break RSA encryption. General-purpose quantum computers use several kinds of qubit designs, including superconducting qubits, ion-trap systems, semiconductor-based qubits, and spin qubits.
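Why factoring matters for RSA: once the two prime factors of a public modulus are known, the private key follows directly. The toy sketch below uses the textbook-sized key p = 61, q = 53 and classical trial division, which is hopeless against real 2048-bit moduli; running Shor's algorithm on a large, error-corrected general-purpose quantum computer is what would make factoring such moduli feasible. The function names are illustrative, not part of any cryptography library.

```python
# Textbook-sized toy RSA key (p = 61, q = 53); real keys use primes with
# hundreds of digits, far beyond the reach of trial division.
N = 3233  # public modulus, 61 * 53
E = 17    # public exponent


def trial_division(n):
    """Return (p, q) with p * q == n by testing divisors up to sqrt(n)."""
    if n % 2 == 0:
        return 2, n // 2
    f = 3
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 2
    raise ValueError("n is prime")


def recover_private_exponent(n, e):
    """Factor n, then compute d = e^-1 mod (p-1)(q-1)."""
    p, q = trial_division(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse; requires Python 3.8+


d = recover_private_exponent(N, E)
ciphertext = pow(65, E, N)       # encrypt the message 65 with the public key
print(d, pow(ciphertext, d, N))  # 2753 65
```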

In 2017, IBM proposed a standard for measuring the computational ability of quantum computers called "quantum volume." Rather than counting qubits alone, quantum volume accounts for the number of physical qubits, the connectivity between them, and the time to decoherence, as well as the available hardware gate set and the number of operations that can be run in parallel.
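In a simplified reading of IBM's 2017 formulation, these factors are folded into an effective error rate $\varepsilon_{\mathrm{eff}}(n)$ for circuits that use $n$ of a device's $N$ qubits: poor connectivity or a limited gate set means more operations per circuit, and therefore a higher effective error rate. The volume is then the size of the largest "square" circuit, equal in width and achievable depth, that the device can run:

$$V_Q = \max_{n \le N} \left[\min\left(n,\ d(n)\right)\right]^2, \qquad d(n) \approx \frac{1}{n\,\varepsilon_{\mathrm{eff}}(n)}$$

Because $d(n)$ shrinks as either $n$ or $\varepsilon_{\mathrm{eff}}$ grows, adding qubits without lowering error rates does not raise $V_Q$. IBM has since revised how quantum volume is measured, so this should be read as an illustration of the idea rather than the current protocol.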

According to the researchers who defined quantum volume, the metric "enables the comparison of hardware with widely different performance characteristics and quantifies the complexity of algorithms that can be run." The researchers also noted that quantum volume can only increase if the number of qubits grows and the error rate of those qubits falls in tandem.
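A minimal sketch of that trade-off, assuming a simplified 2017-style formula in which a single effective error rate applies at every circuit width n, so achievable depth is d(n) = 1 / (n * error) and the volume is the largest min(n, d(n)) squared. The function name and the device parameters are hypothetical, not measurements of any real system.

```python
def quantum_volume_simplified(num_qubits, eff_error):
    """Simplified 2017-style quantum volume (illustrative assumption).

    Assumes one effective error rate for every circuit width n, so the
    achievable depth is d(n) = 1 / (n * eff_error), and
    V_Q = max over n in 1..num_qubits of min(n, d(n)) ** 2.
    """
    best = 0.0
    for n in range(1, num_qubits + 1):
        depth = 1.0 / (n * eff_error)
        best = max(best, float(min(n, depth)) ** 2)
    return best


# Hypothetical devices, not measurements of any real system.
print(quantum_volume_simplified(20, 0.01))   # 100.0 (baseline)
print(quantum_volume_simplified(40, 0.01))   # 100.0 (twice the qubits, same error rate)
print(quantum_volume_simplified(20, 0.005))  # 196.0 (same qubits, half the error rate)
```

Doubling the qubit count leaves the volume unchanged because depth, not width, is the bottleneck; halving the error rate raises it.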

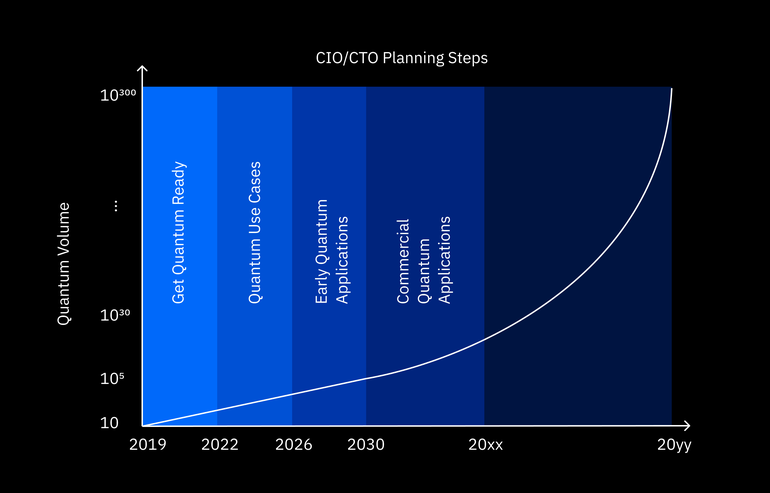

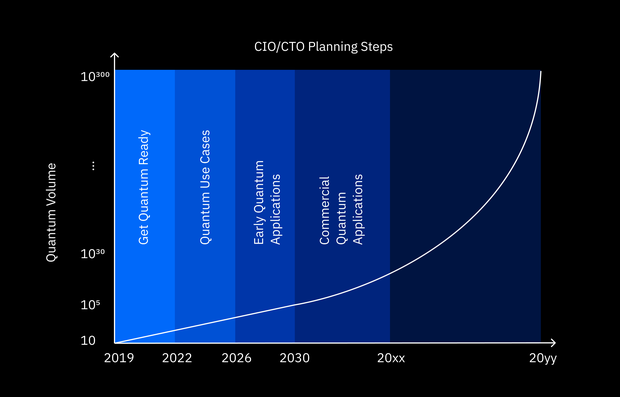

On Monday, at Gartner's Catalyst Conference in San Diego, the research and advisory firm embraced the quantum volume benchmark as an important way to measure progress toward quantum advantage, the point at which quantum computers can perform a calculation demonstrably faster than classical computers. Gartner also stressed the importance of quantum volume when planning for the adoption of quantum computers.


While it is presently unclear when quantum advantage will be achieved, Gartner projects that enterprises will be evaluating quantum use cases by 2022, that early quantum applications will be in deployment by 2026, and that quantum computing will be in commercial use by 2030.

For more on quantum computing, check out "Why post-quantum encryption will be critical to protect current classical computers," "IBM reduces noise in quantum computing, increasing accuracy of calculations," "D-Wave's 2000Q variant reduces noise for cloud-based quantum computing," and "Quantum computing is not a cure-all for business computing challenges" on TechRepublic.
